Download

Videos at ACM MM'2022

Analysis

GitHub Repo


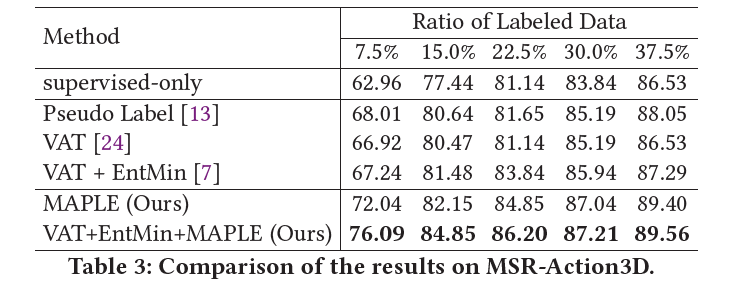

MSR-Action3D

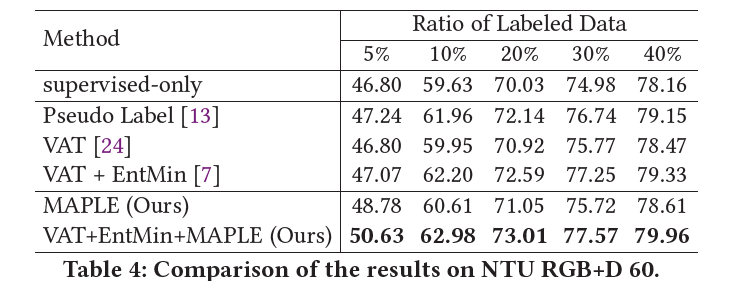

NTU RGB+D 60

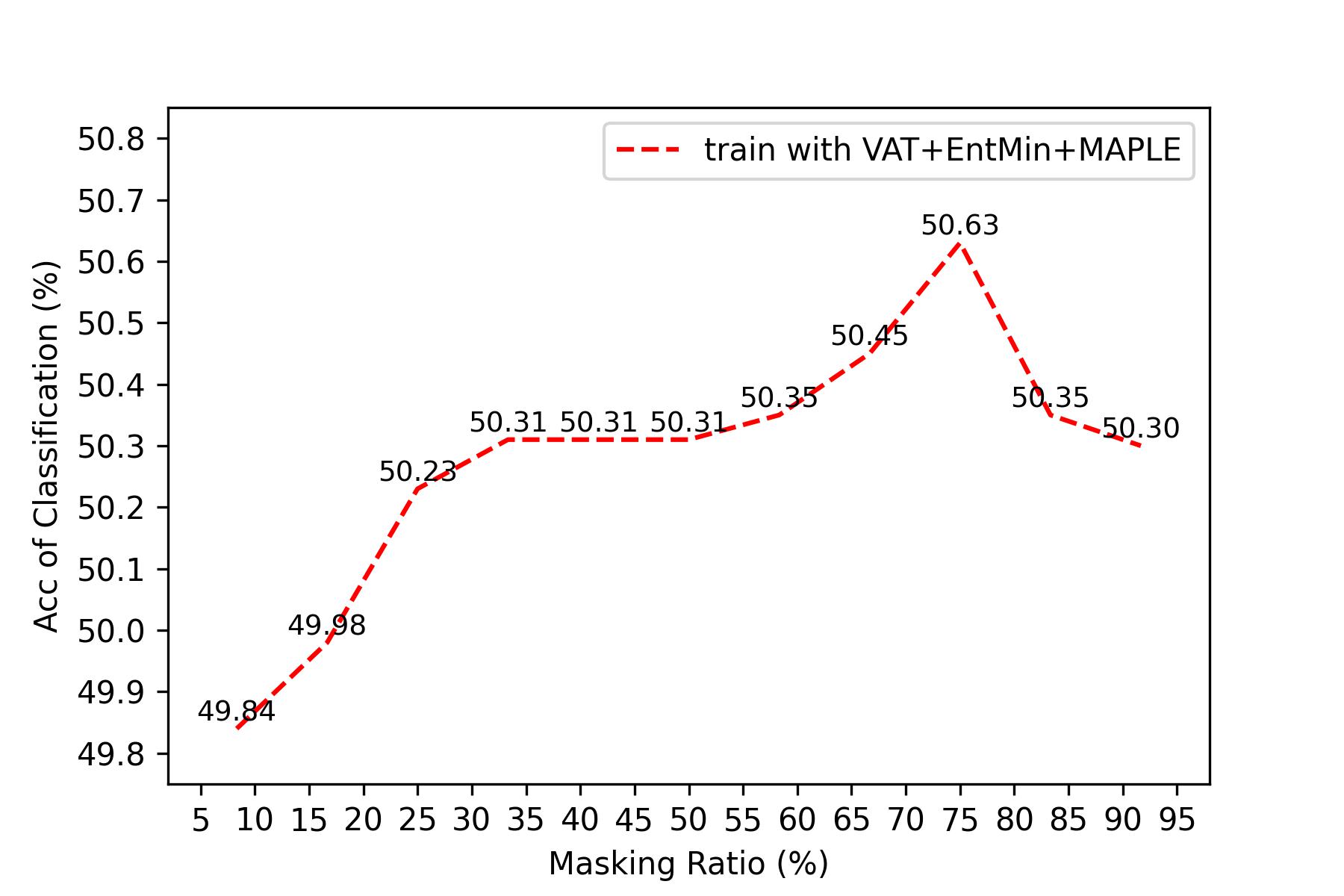

Classification accuracy on NTU RGB+D 60 with 5% labeled data, for different masking ratios.

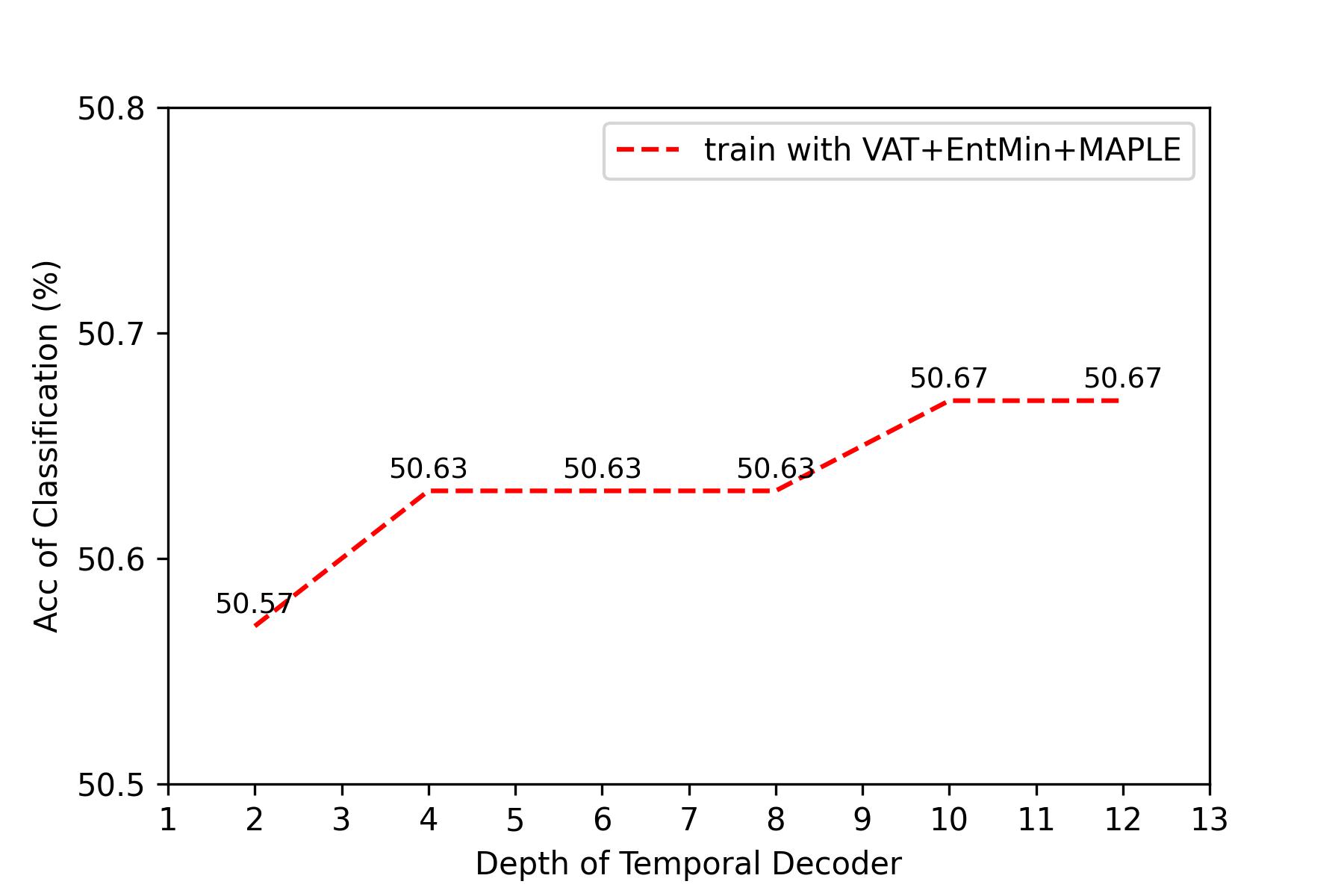

Classification accuracy on NTU RGB+D 60 with 5% labeled data, for different depths of the temporal decoder.

MSR-Action3D, NTU RGB+D 60, NTU RGB+D 120

Chen, Liu, Liu, Zhang, Zhang, Han, Mei. MAPLE: Masked Pseudo-Labeling autoEncoder for Semi-supervised Point Cloud Action Recognition. In ACM MM, 2022 (Poster). (arXiv)

Acknowledgements

This work was done when Xiaodong Chen was an intern at JD AI Research.

Contact